Belgian CVD is deeply broken

A bit of background

A few months ago, I discovered a vulnerability in the online platform of Belgium’s second-largest bank. Hoping to get it fixed, I reported the issue to both the bank itself and the Centre for Cybersecurity Belgium (CCB), following the principles of Coordinated Vulnerability Disclosure (CVD). Unfortunately, the experience was far from ideal. In the end, both the CCB and the bank denied that any vulnerability existed and seemed to assume that I must have committed a crime in order to discover what I reported. They kept implicitly threatening me with legal consequences.

For months, I wondered what the right thing to do was, and in the end I did nothing. Very recently, however, I came across the blog post “Belgium is unsafe for CVD” by Floor Terra, which finally gave me the motivation I needed to write up my experience.

In his post, Floor highlights how little protection Belgian law offers to vulnerability reporters, and how the legally mandated secrecy is impractical and counterproductive. In this post, I’m going a step further: I believe the Belgian system for CVD is fundamentally broken on every level: the law, the CCB, and the affected companies (or at least the ones I dealt with). One reason I delayed writing this post is that I know this message won’t sit well with everyone. However, the issue is too important to remain silent. Hopefully, if enough people speak up, change will follow.

If you are reading this post and feel unhappy with what I wrote, please get in touch; let’s work together to make CVD in Belgium better.

With that said, let’s get into the actual story.

Finding and reporting the vulnerability

For a while, every time I logged into KBC’s eBanking portal, I had the feeling that the login system wasn’t very secure. One day, I decided to test that idea. I ran a simple proof-of-concept attack, and to my surprise, I was able to bypass the login system with almost no effort. You can see a demonstration of the attack below.

Shortly after this article was published, itsme contacted me to discuss the issues I raised. The initial conversation was constructive, and I am optimistic that a mitigation is on the way. Therefore, I’ve temporarily taken down the video that shows how to perform the attack.

At a high level, the attack is possible because the KBC browser session is not sufficiently bound to the itsme authentication app.

As shown in the video, someone who knows a person’s phone number and thinks they might be a KBC customer has a good chance of getting into their bank account. All it takes is a short phone call, for example asking them to check their balance or confirm a payment. There are many ways to come up with a convincing reason. This becomes even easier when the attacker is close to the victim. For example, imagine a business partner asking: “did you receive the payment?”

This attack is so simple that it almost makes you question whether it’s a vulnerability at all. Maybe the system is meant to work this way? In a sense, that’s true. What I discovered isn’t an implementation or a configuration flaw, but a design flaw.

An authentication system should ensure that the right person is performing an action. The login system used by KBC clearly fails at this: It allows someone else to log in, even when the actual account holder never meant to allow that. A proper authentication system should be designed to prevent exactly these types of incorrect authorizations. That is its main job. Thus, even though KBC’s system might work exactly as designed, it is still vulnerable!

Implementing mechanisms to protect against this type of attack is standard practice in the banking industry. In fact, many simple and effective prevention measures are available: for example, requiring the user to enter a password or a one-time code directly into the browser, or asking them to scan a QR code with their authentication app. Strangely enough, even KBC’s login system used to do this correctly, until they switched to the current itsme-based[1] login mechanism. That change thus appears to have removed an essential layer of protection.
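To make “binding” more concrete, here is a minimal, hypothetical sketch of a login flow in which the browser session and the authentication app share a one-time challenge, in the spirit of the QR-code measure mentioned above. It is not KBC’s or itsme’s actual protocol; the class and method names (`LoginServer`, `start_login`, `approve`, `poll`) are invented for illustration. The browser that starts a login receives a random challenge it displays (for example as a QR code), and the app can only approve a login by presenting that exact challenge. A victim who is tricked into approving therefore approves only the login whose QR code they scanned in their own browser, not the attacker’s.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class PendingLogin:
    user_id: str
    challenge: str  # shown only in the browser that started this login
    approved: bool = False


@dataclass
class LoginServer:
    """Hypothetical server that binds a browser session to an app approval."""
    pending: dict[str, PendingLogin] = field(default_factory=dict)

    def start_login(self, user_id: str) -> tuple[str, str]:
        # Called by the browser. Returns a session id (kept by the browser)
        # and a one-time challenge that the browser renders, e.g. as a QR code.
        session_id = secrets.token_urlsafe(16)
        challenge = secrets.token_urlsafe(16)
        self.pending[session_id] = PendingLogin(user_id, challenge)
        return session_id, challenge

    def approve(self, user_id: str, scanned_challenge: str) -> bool:
        # Called by the authentication app after the user scans the QR code.
        # Only the session whose challenge was scanned gets approved, so
        # knowing the victim's phone number or user id is not enough.
        for login in self.pending.values():
            if login.user_id == user_id and secrets.compare_digest(
                login.challenge, scanned_challenge
            ):
                login.approved = True
                return True
        return False

    def poll(self, session_id: str) -> bool:
        # Called by the browser to check whether its own login was approved.
        login = self.pending.get(session_id)
        return login is not None and login.approved


if __name__ == "__main__":
    server = LoginServer()
    # The attacker starts a login for the victim but cannot show them the QR code.
    attacker_session, _ = server.start_login("victim")
    # The victim starts their own login and scans the code in their own browser.
    victim_session, victim_challenge = server.start_login("victim")
    server.approve("victim", victim_challenge)
    print(server.poll(victim_session), server.poll(attacker_session))
```

By contrast, a design where the app simply shows “approve login?” for whatever login is pending on the account lets the attacker’s session be approved by a victim who never saw the attacker’s screen, which is the kind of insufficient binding described earlier.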

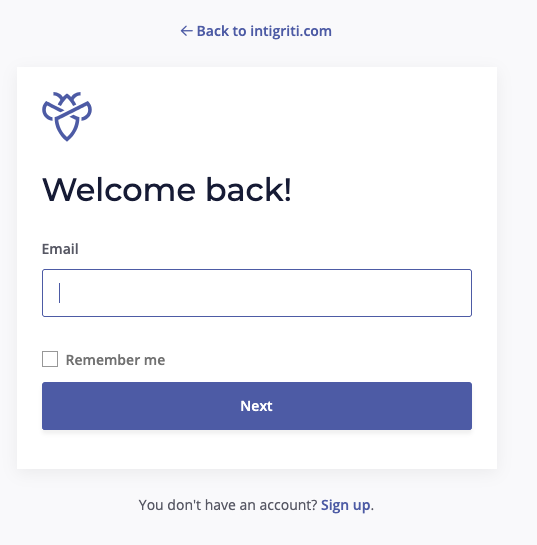

Once I was certain that I found a vulnerability, I wanted to report it responsibly. I searched for “KBC responsible disclosure” and found their responsible disclosure policy. The policy says that all vulnerabilities must be reported through KBC’s public program on the Intigriti platform. I clicked the link, and was greeted by …

… a login form. That seemed a bit odd, but I wanted to do the right thing, so I signed up on the Intigriti platform and verified my email. After I did that, I was greeted by …

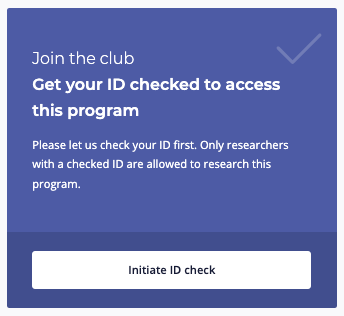

… a request to have my government ID checked.

This made me uncomfortable. I just wanted to inform KBC about a vulnerability in their system. Why would I need to go through an ID verification? I also noticed something in KBC’s responsible disclosure policy:

> In no circumstance can you make anything related to the investigation public unless to the extent required by law;

And on KBC’s program page on Intigriti, it clearly states: “This is a responsible disclosure program without bounties.”

Now, I wasn’t looking for a reward. I simply wanted to help by pointing out a serious issue. But I started to feel like KBC was asking a lot from security researchers: ID verification, strict confidentiality, but nothing in return.

Not wanting to upload my ID, I looked for another option. I searched for “KBC CERT” and came across the KBC Computer Emergency Response Team page. Even better: the page listed a direct email address: cert@kbc.be. Perfect!

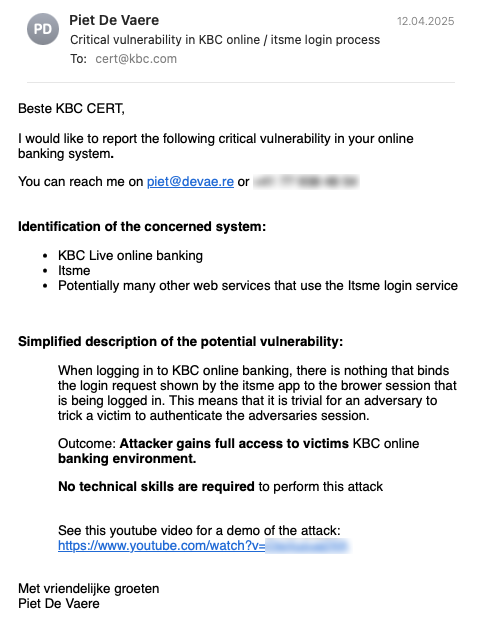

So I wrote and sent the following email to KBC.

Since KBC uses the itsme platform for user authentication, it wasn’t clear to me whether the issue lay with KBC or with itsme. Given that itsme is widely used across Belgium, I wanted to report the issue to them as well. However, after quite a bit of searching, I wasn’t able to find any useful contact information. Strange, given how critical itsme is to the Belgian economy.

Reporting to the CCB

I did remember, however, that the Centre for Cybersecurity Belgium (CCB) has its own CERT, and I found this page on vulnerability reporting. Since banks are considered critical infrastructure, I decided it was important to notify the CCB as well. I sent them a message similar to the one I had sent to KBC.

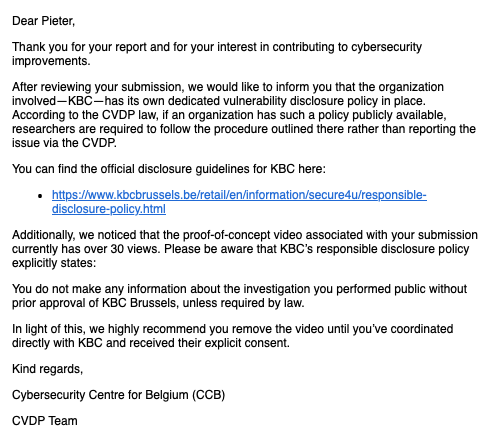

A couple of days later, I received a response:

The response from the CCB essentially said two things. First, they claimed that because KBC has a responsible disclosure policy[2] in place, Belgian law states I should not contact the CCB. This is incorrect, and I will explain why in a moment. Second, they asked me to remove the demo video from YouTube.

Notably, their message did not address the actual content of my report. They did not seem particularly interested in the vulnerability itself. They also got my name wrong, which did not inspire confidence.

I replied promptly to clarify a few things. I told them I had already reported the issue to KBC. I explained that I did not want to upload a copy of my government ID. I mentioned that the vulnerability might affect more organizations than just KBC. I also pointed out that the YouTube video was unlisted, so it had not been made public.

Later that evening, I decided to take a closer look at the Belgian legislation on Coordinated Vulnerability Disclosure (CVD). I found that CVD is covered in Articles 22 and 23 of Belgium’s NIS2 implementation law (a.k.a. the “law of 26 April 2024 establishing a framework for the cybersecurity of network and information systems of general importance for public safety”).

Article 22 describes the responsibilities of the CCB. It states that the national CSIRT (i.e., the CCB) should act as a trusted intermediary between reporters and affected organizations. It also clearly says that any natural or legal person may report a potential vulnerability to the national CSIRT. Furthermore, it states that the CSIRT must ensure that reported vulnerabilities are properly followed up.

Contrary to what the CCB claimed, nowhere does the law state that I should not report vulnerabilities to the CCB if a CVD policy is in place.

Article 23 then lists a set of conditions that, if met, grant vulnerability reporters legal immunity from certain computer-related crimes (think actual “hacking”). Some of the listed requirements on reporters are:

- “they did not disclose information about the discovered vulnerability or affected systems without the consent of the national CSIRT [i.e., the CCB]”; and

- “they promptly, and no later than 24 hours after discovering a potential vulnerability, submitted a simplified notification identifying the relevant system and providing a basic description of the potential vulnerability to the organization responsible for the system and to the national CSIRT”.

This is interesting. It turns out that what the CCB told me is not only incorrect, but could actually expose me to criminal liability. The law clearly states that I am supposed to notify both the affected organization and the CCB. If I fail to do that, I could lose my legal protections.

Article 23 also requires that reporters

- “promptly, and no later than 72 hours after the discovery, submit a full notification to the responsible organization (respecting the reporting procedures set by the organization, if any) and to the national CSIRT.”

In other words, if I do not agree to have my ID verified on the Intigriti platform, which is required to follow KBC’s CVD policy, I lose my legal protections. That seems unreasonable. What other hurdles could KBC impose? Under the Belgian CVD framework, they could theoretically require me to comply with any process they choose, with the threat of legal liability hanging over my head.

In any case, I reviewed the list of offenses for which Article 23 could offer legal protection and concluded that none of them applied to my situation. After all, I had only logged into my own eBanking account. That was reassuring, because it meant I was not legally bound by KBC’s disclosure procedure or any confidentiality requirements.

I then sent a short follow-up email to the CCB explaining what I had found. I pointed out that the law required me to report the vulnerability and that the CCB had a legal obligation to support that process. I also explained that I was not relying on the protections in Article 23, but that I would still refrain from making anything public, for now, out of ethical responsibility. Lastly, I asked the CCB to shift their focus to the actual vulnerability, rather than the reporting formalities.

They did not like that.

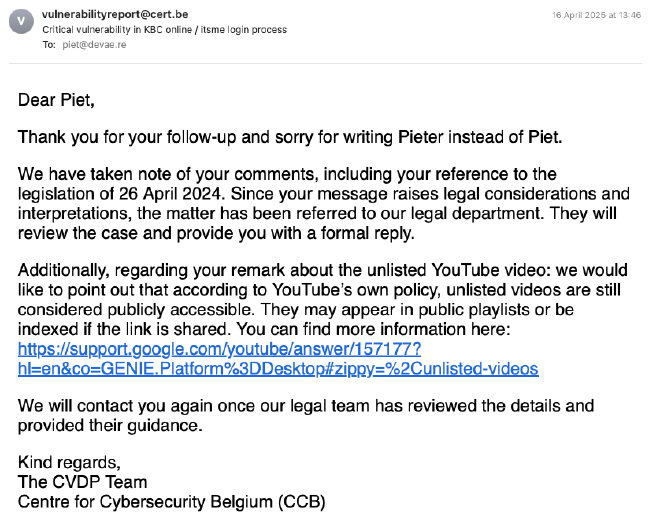

Instead of addressing the actual content of my report, the pointless discussion about its form continued.

The link to Google’s support page contained the following information about “unlisted videos”. For the life of me, I cannot find where it states that “unlisted videos are still considered publicly accessible”.

Trying a softer approach

My previous approach clearly wasn’t working, and I was thinking about what to do next. Suddenly, I remembered that the head of CERT.be (the part of CCB responsible for CVD) was one of my LinkedIn contacts. I wrote him the following message:

Dear (name redacted),

Since this weekend, I have been trying to report a vulnerability related to Itsme to CERT.be, but unfortunately the process has not been going very smoothly so far. I must admit that this is not entirely due to CERT.be — I could have responded better myself at certain moments.

Still, I would like to work towards a constructive and collaborative approach. Would you be able to look into this matter to see if anything can be done? The reference number is CVDP 402.

Thank you in advance for your time and assistance.

Kind regards,

Piet

A bit later, I received a reply. He explained that the CCB was currently in “priority mode” due to a high volume of incoming reports, but that he would ask the team to take a closer look at mine. I also sent him the YouTube link to the demonstration video, and we ended the conversation on friendly terms.

This actually seemed to help. A couple of days later, I received a message from the CCB saying they had reviewed the issue in more detail. Unfortunately, they did not appear to agree that what I reported was a real vulnerability:

> While we understand your reasoning, this scenario inherently depends on phishing techniques and precise timing, rather than a vulnerability in the authentication protocol itself. There is no realistic way for the attacker to know when the victim is about to authenticate without prior social engineering, which moves this into the domain of classic phishing, not a technical flaw.

As I mentioned earlier, I strongly disagree with the claim that this is not a vulnerability. In fact, a flaw in a protocol’s design is arguably more serious than a simple implementation bug. And I’m not the only one who sees it that way.

I studied and currently lecture at ETH Zürich, Europe’s second-highest ranked computer security research institute. Among my friends and colleagues, many of whom are experienced security researchers, everyone I showed the proof-of-concept to immediately recognized the severity of the issue. All of them were surprised that a bank could make such a fundamental mistake in the first place.

Interestingly, the rest of the CCB’s message did acknowledge the problem, at least to some degree. They added:

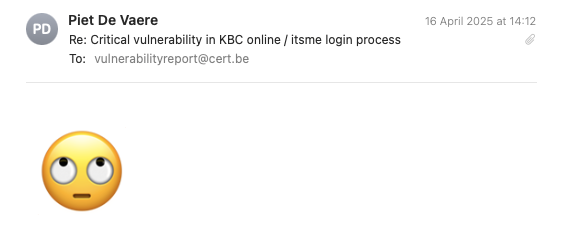

> Nonetheless, we discussed your submission internally and revisited the scenario. This specific pattern was reported to us approximately five years ago. The responsible party, itsme®, addressed the issue at the time by implementing a risk-based mitigation mechanism now known as Proof of Possession of Registration (PoPR). One key element of this mechanism is the use of a visual icon challenge during the authentication process, shown when the trust level of the session is considered low (e.g., from an unrecognized browser or device). This forces the user to confirm the session visually and helps protect against unauthorized access attempts.

Interesting! On the website of itsme, I could actually find a description of this mechanism.

Although I couldn’t find any protocol details, this mechanism seems like it could mitigate the vulnerability I discovered. If implemented correctly, of course. So it was good to hear that itsme had implemented this feature. However, in my experience using KBC’s eBanking, I have never seen this “extra security measure” appear.

The CCB wrote the following:

> In the video you provided, this challenge is not triggered — indicating the session was likely assessed as trusted. We re-tested the scenario using multiple combinations of devices, networks, and browsers, and consistently received the icon challenge under untrusted conditions.
>
> If you are able to reliably reproduce a bypass of this session trust mechanism — i.e., receive no icon challenge despite using an untrusted environment — we would be glad to investigate further and coordinate with itsme®. In that case, we ask that you:
>
> - Provide a full written report detailing the conditions and steps under which the bypass occurs.
> - Remove the video from YouTube, regardless of its unlisted status, to avoid unintended exposure, as unlisted videos may still appear in public playlists or search results (see YouTube policy).
>
> Thank you for your understanding and cooperation.
>
> Sincerely,
>
> The CVDP Team
>
> Centre for Cybersecurity Belgium (CCB)

Hmm, could that be right? During my tests, I used browsers without any stored cookies and connected from a foreign IP address. That should clearly be treated as untrusted. Just to be sure, I ran a few more tests. I tried logging in as an “attacker” from an Indian IP address, and also through the Tor network. Both of these scenarios should obviously raise red flags, especially since I was logging in from my home network at the same time. However, the attack worked every time.

It seems to me that KBC has configured its itsme integration not to use this “extra security measure”. Other common protection measures (e.g., impossible travel detection) were also clearly lacking. It’s unclear why the CCB claims the icons were always triggered for them; perhaps they were logging into a different service than KBC?
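Impossible travel detection is conceptually simple: flag a login if getting there from the previous login would require moving faster than is physically plausible. The sketch below is a minimal illustration under stated assumptions, not anything KBC or itsme is known to run. It assumes each login comes with a timestamp and an approximate latitude/longitude (for example from IP geolocation); the `Login` record, the helper names, the 1000 km/h speed limit, and the 100 km geolocation tolerance are all hypothetical choices.

```python
import math
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_SPEED_KMH = 1000.0  # assumed limit, roughly airliner speed
GEO_TOLERANCE_KM = 100.0         # assumed slack for inaccurate IP geolocation


@dataclass
class Login:
    timestamp: datetime
    lat: float  # degrees, e.g. from IP geolocation
    lon: float


def haversine_km(a: Login, b: Login) -> float:
    """Great-circle distance between two login locations, in kilometres."""
    phi1, phi2 = math.radians(a.lat), math.radians(b.lat)
    dphi = phi2 - phi1
    dlambda = math.radians(b.lon - a.lon)
    h = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    # min() guards against tiny floating-point overshoots above 1.0.
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(h)))


def is_impossible_travel(prev: Login, curr: Login) -> bool:
    """True if moving between the two logins would need an implausible speed."""
    distance = haversine_km(prev, curr)
    if distance <= GEO_TOLERANCE_KM:
        return False  # close enough to be the same place, given geolocation noise
    hours = abs((curr.timestamp - prev.timestamp).total_seconds()) / 3600
    if hours == 0:
        return True  # simultaneous activity from two distant places
    return distance / hours > MAX_PLAUSIBLE_SPEED_KMH


if __name__ == "__main__":
    t0 = datetime(2025, 5, 1, 12, 0, tzinfo=timezone.utc)
    home = Login(t0, 50.85, 4.35)                               # Brussels
    attacker = Login(t0 + timedelta(minutes=5), 28.61, 77.21)   # New Delhi
    print(round(haversine_km(home, attacker)), is_impossible_travel(home, attacker))
```

In the scenario above, the victim approves on a phone at home in Belgium while the “attacker” browser appears in India minutes later, so a check like this would flag the login; a real system would combine it with other signals rather than rely on it alone.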

I reported these findings back to the CCB. As a sign of goodwill, I also let them know that I had changed the YouTube video’s visibility to private.

Unfortunately, I never heard back from them.

Back to KBC

Surprisingly, throughout all of this, I never received any response from KBC. Not even a confirmation that they had received my report.

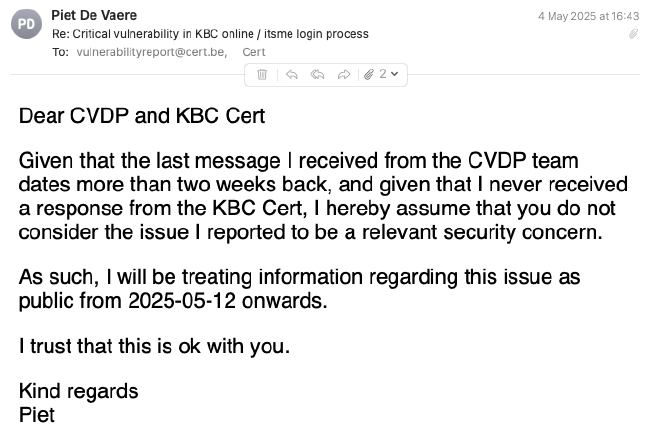

At some point, I decided I had waited long enough. I sent a follow-up email to both KBC and the CCB, hoping to finally move things forward.

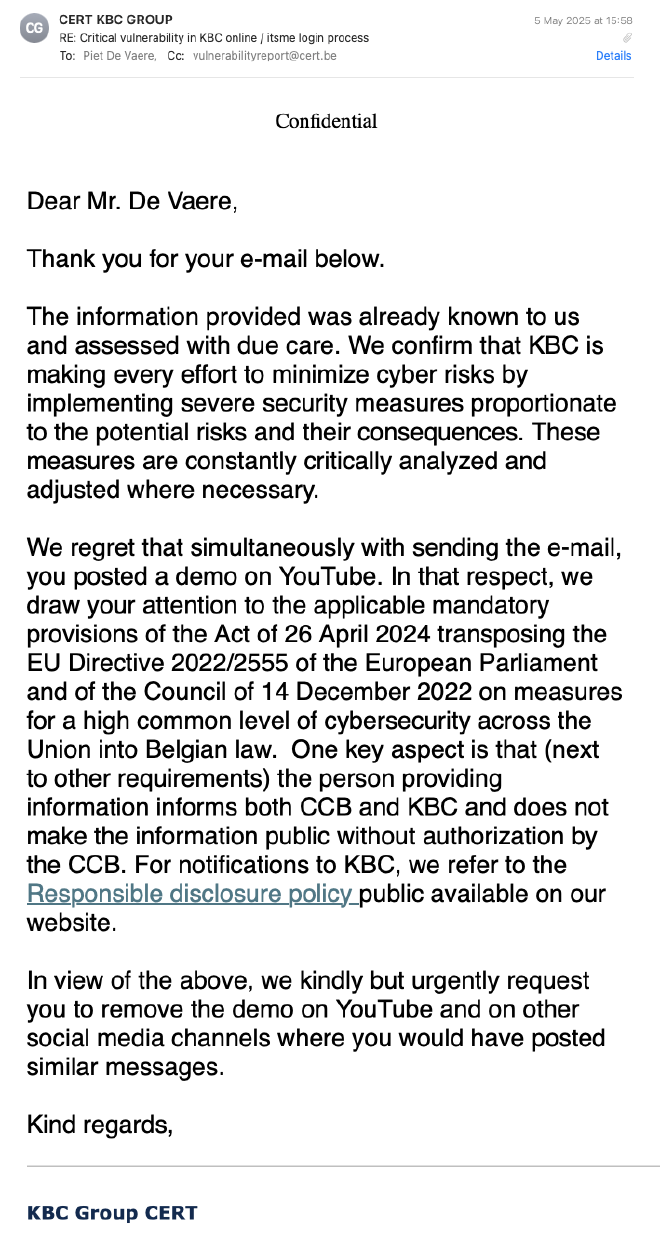

I responded to KBC and told them the same things I had already told the CCB: that I had set the video to private two weeks earlier (or at least thought I had, see below), that I was not relying on Article 23 for legal protection, and that I still considered this a serious issue. I also pointed them to the EU’s DORA legislation, which imposes strict cybersecurity requirements on banks.

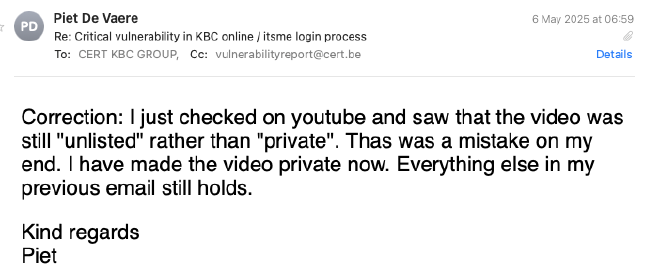

After I sent that email, I checked the YouTube video again and realized it was still set to unlisted, not private. That wasn’t intended. I must have forgotten to confirm the change or made a mistake somewhere along the way.

I quickly sent KBC a follow-up email to explain the mix-up and let them know that I had now correctly set the video to private.

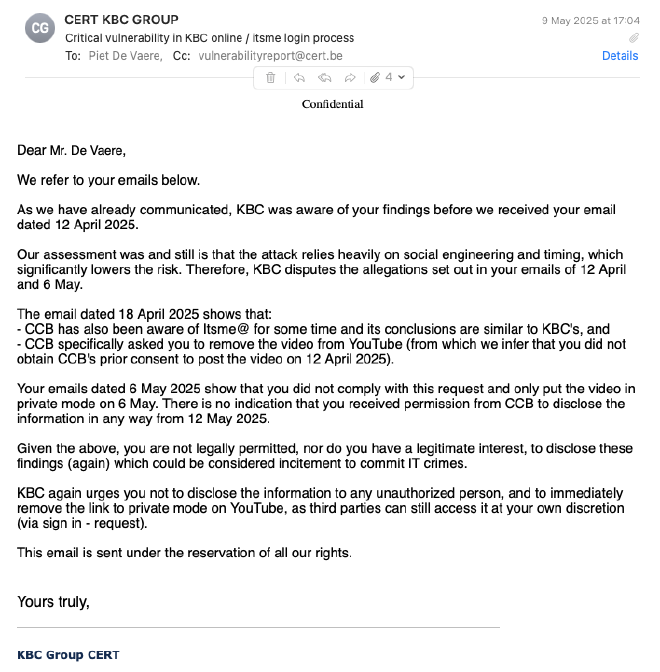

The response from KBC came a couple of days later:

Ufff, more legal back-and-forth. It didn’t seem like anything useful was going to come out of this. I chose not to respond and decided to wait it out.

On the 12th of May, I made the video unlisted again.

The aftermath

It has now been roughly three months since I first contacted both KBC and the CCB about this vulnerability, and as far as I can tell, nothing has changed. Based on their responses throughout this process, I seriously doubt that either KBC or the CCB intends to address the insecure login mechanism.

However, since then, a couple of things have happened:

-

I spoke with someone who had reported a similar vulnerability a few years ago. This may be the same case the CCB referred to when they mentioned a report “approximately five years ago”. According to this person, that disclosure also did not go smoothly. There were even threats of legal action.

-

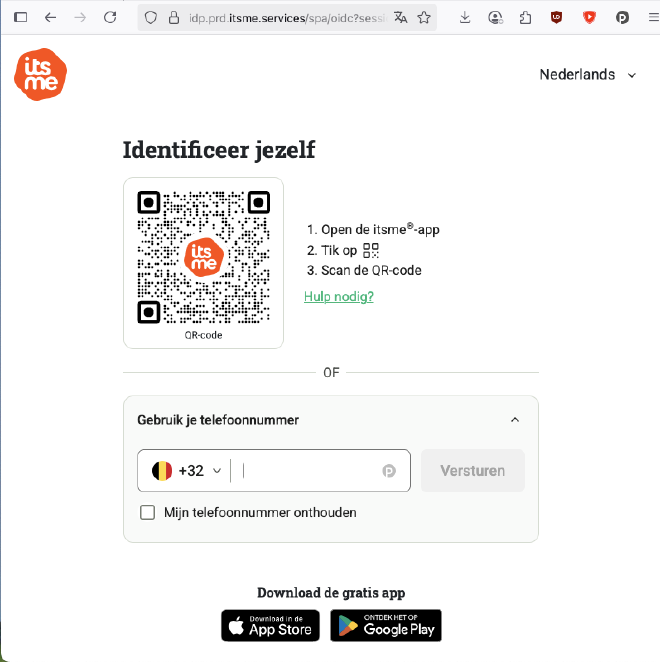

I discovered that itsme actually does support a much more secure login method. This method is based on scanning a QR code, as shown in the screenshot below. Knowing that this more secure option exists makes it even more surprising, and concerning, that KBC continues to use the insecure version.

-

Talking to others with experience in vulnerability reporting in Belgium, I’ve found that my experience is far from unique. Everyone I spoke to described the process as unhelpful, bureaucratic, and discouraging. There seems to be a clear pattern. In particular, it appears that the CCB (almost?) never grants permission to publicly disclose vulnerabilities. Instead, they consistently insist that their procedure must be followed in every case, even when that’s not legally required. This is misleading. As discussed earlier, Article 23 only applies if a crime was committed, and if it wasn’t, the prescribed process is optional. This kind of inflexible approach not only undermines trust but also discourages responsible disclosure. And in the end, that puts everyone at greater risk.

What is next?

As is broadly recognized in the security community, vulnerability reporting plays a critical role in keeping digital systems secure. Unfortunately, it seems like Belgium’s current ecosystem for Coordinated Vulnerability Disclosure (CVD) is deeply broken. If we want to fix it, we need to make several key changes:

- Stop treating vulnerability reporters as criminals. Both the CCB and KBC approached my report with the assumption that wrongdoing must have occurred simply because a vulnerability was found. Throughout the process, I faced repeated implicit threats of legal consequences. This mindset discourages people from coming forward and ultimately puts systems at greater risk.

- Ensure a fairer balance between the rights of reporters and affected organizations. The current legislation gives disproportionate power to the receiving parties. Reporters are legally bound to follow whatever process an organization or the CCB imposes, no matter how unreasonable. This needs to change to ensure that good-faith reporting is legally protected and practically feasible.

- Allow for vulnerabilities to be publicly disclosed. Public disclosure plays a vital role in the CVD ecosystem. It raises awareness, encourages accountability, and motivates organizations to take action. However, under the current Belgian framework, public disclosure is de facto prohibited. The CCB seems to rarely grant permission to disclose, and reporters are expected to remain silent indefinitely, even when a vulnerability remains unaddressed. This undermines transparency and reduces pressure on organizations to fix serious issues.

- Focus on the content, not the form. Rather than fixating on how a report is submitted or what platform is used, organizations should engage meaningfully with the actual security issue being reported. Wasting time on procedural formalities only delays fixes and damages trust.

- Upskill the CCB. While it’s difficult to confirm definitively, there are indications that the CCB currently lacks the capacity and expertise to effectively engage with vulnerability reporters. This gap needs to be addressed. The CCB should develop a stronger understanding of hacker and bug bounty culture, and improve its ability to identify and assess relevant vulnerability reports. The Dutch NCSC is reportedly much more mature in this area, so it may be worthwhile to seek guidance and support from our friends in the north.

Oh, and perhaps KBC should fix their authentication flow. As of this writing, their platform is still vulnerable.

[1] Itsme is a digital identity app widely used in Belgium to access government services, banks, and similar platforms. It is operated by a consortium of banks and telecom operators. KBC is one of the consortium’s members.

[2] A responsible disclosure policy is essentially the same thing as a coordinated vulnerability disclosure policy.